How to build the best GLM/GAM risk models?

Introduction

Generalised Additive Models (GAMs) and especially their subset – Generalised Linear Models (GLMs) – are used to predict the actuarial cost in insurance pricing processes all around the globe.

They are not as accurate as machine learning models (such as Gradient Boosting Machine), but they don’t require specific Explainable Artificial Intelligence methods to understand how premiums were obtained. They are less prone to overfitting, fully auditable, and in most cases easily transformed into rating factors.

Usually, insurance companies have access to similar information about policyholders, their policies and claims. In order to outperform the competition, insurers can either look for new data sources, or focus on polishing their models on their current data. Having better predictive models than others, results in increased profitability and the market share at the same time.

Below, we briefly introduce the most common techniques used to create well-predicting generalised models, including:

• Feature selection,

• Data leakage prevention,

• Categories mapping,

• Engineering of numeric features,

• Dealing with low exposure in tails,

• Interactions,

• Geographical modelling,

• Regularisation,

• Automation.

Feature Selection

Once we have our modelling assignment understood, and we prepared all initial setup, i.e.:

• we cleaned our dataset,

• we selected the target and weight variables,

• we chose the family of probability distribution for the model,

• we decided on the train-validation split strategy,

we are good to start modelling.

Since we don’t want to have the same prediction (just the weighted average) for all observations, we need to add some features as explanatory variables. But which of them to choose? What to start with? This is the place where feature selection comes into play.

There are two basic, academic approaches to selecting features for the model:

• Forward Selection,

• Backward Elimination.

The first one states that one should be iteratively adding features to the model and monitor whether it improves or not. The other claims that one should initially add all features to the model, and then be dropping the least significant variables one by one to finish only with the set of the most significant features.

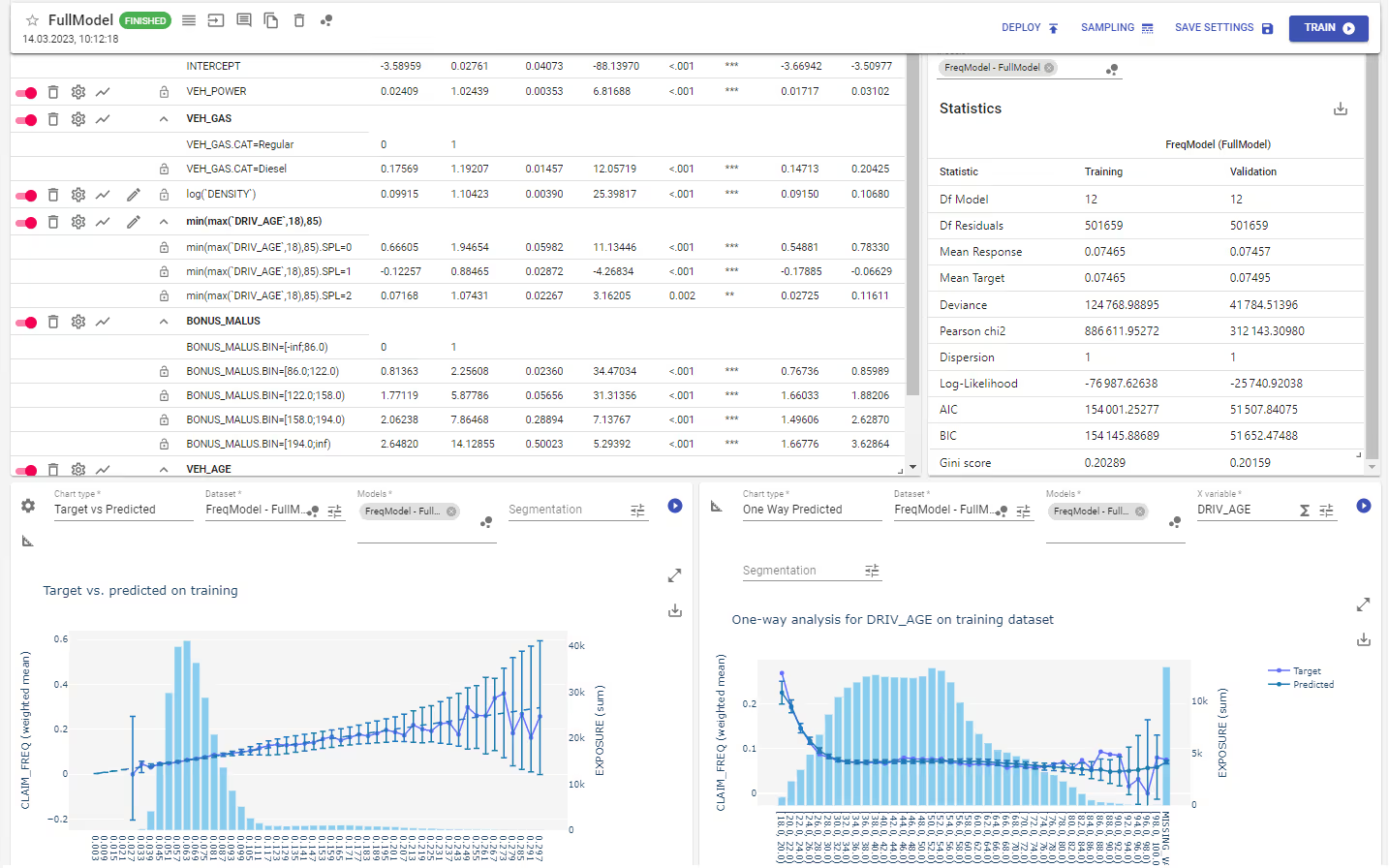

While the latter is really problematic for real cases, when we usually have plenty of columns in our dataset, Forward Selection – assisted with an exhaustive Exploratory Data Analysis (find more on EDA here) – may be a good way to start.

First variables shouldn’t be too specific or have high cardinality, so too many categories (while several or dozens are usually fine, hundreds or thousands are definitely out of the question). It’s recommended to start with the most general features describing policyholders or the subject of insurance.

Pricing experts know their modelling cases very well. Usually, they just know from their experience which set of variables should be included first in such models, because they are significant for this type of assignment (e.g. driver’s age, vehicle’s power, and claims history – for the Motor Third Party Liability coverage), or because there is a business need to include them.

The business expertise may help in choosing the first few variables, but when faced with hundreds of columns, actuaries need some other tools to effectively look for other significant features.

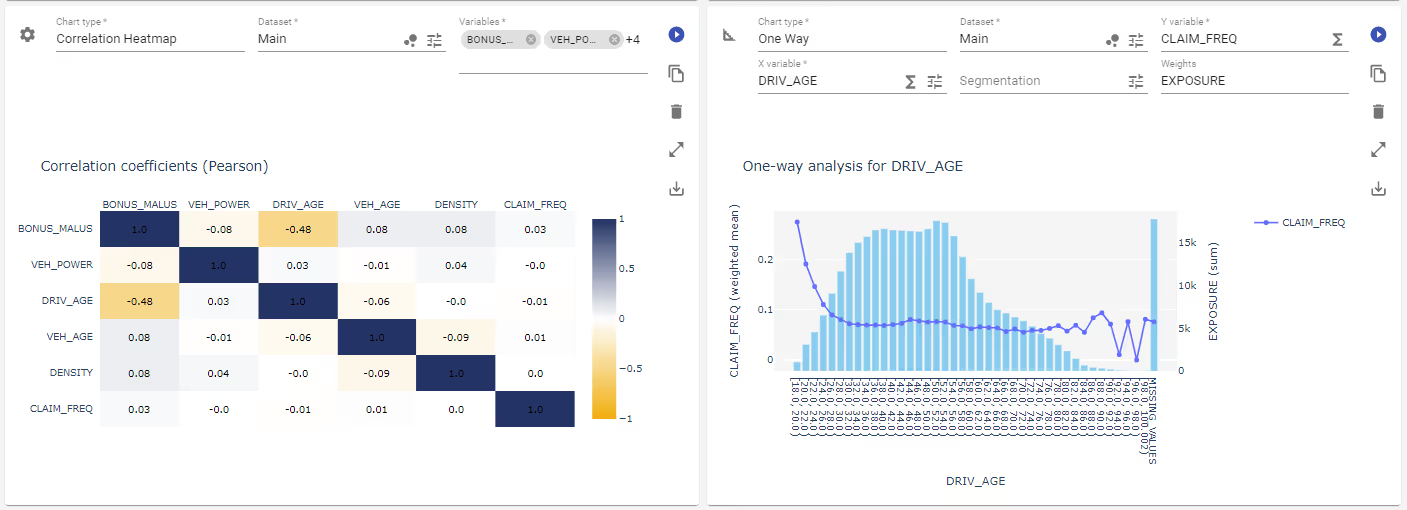

For example, One-Way charts are very helpful. When we see that the target’s values (Y variable) don’t form a flat line (or don’t look like a random noise for numeric features), but differs with respect to the X variable’s values, then most probably this X column will be a great base for a model’s feature.

Also, the correlation matrix may be used to find interesting features. All variables correlated with the target variable are worth considering in the model.

The theory demands variables in a model to be pairwise independent, but in real life it's rarely so. Still, selecting variables for a model, we should avoid including features highly correlated with each other.

Data Leakage Prevention

We should be aware of a situation called ‘data leakage’. It happens when our model makes predictions based on features that are not available when the model is used live. It causes the overestimation of the model’s predictive utility.

For instance, for a frequency of claims model the number of claims would certainly be one of the most significant explanatory variables, but using it is just wrong because it was used to prepare the target variable. To avoid such things, pricing actuaries should understand the meaning of all columns in their datasets, and know what the target variable represents, and how it was created. Additionally, a high correlation with the target should help to identify such unwanted features.

Having some variables selected for the model (of course the list may not be yet completed), we need to fit them properly.

Categories Mapping

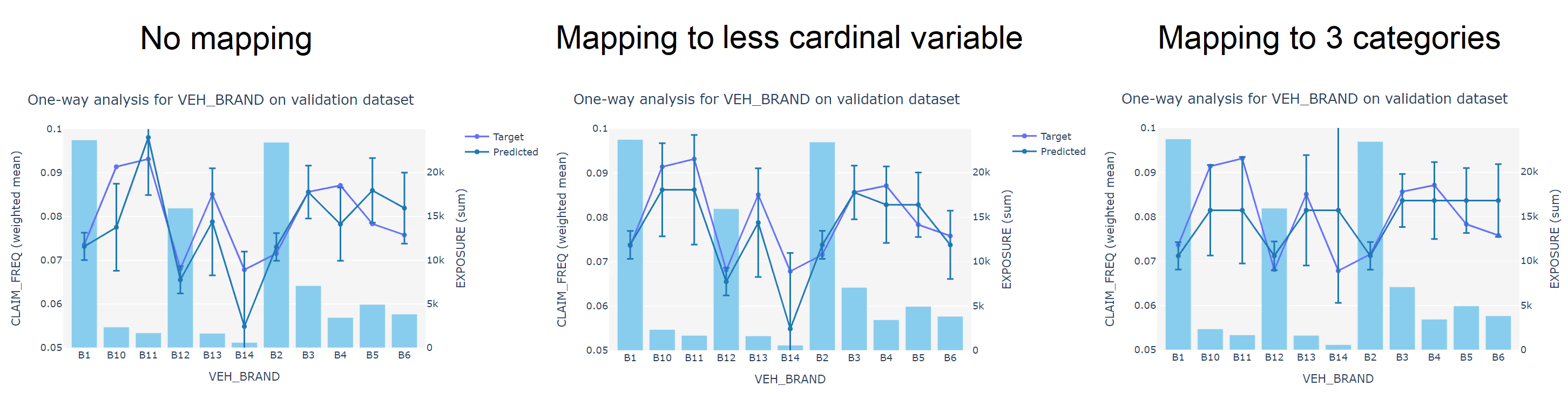

Our categorical features may be too cardinal. Take car makes or models as an example. Including all of them separately in your model would definitely result in overfitting. But how to group them to reduce the cardinality, and acquire a well-generalising model?

One method is to find a replacement for features with that many categories. For car makes we could think of mapping them to their producers’ countries, or segments like ‘exclusive’, ‘common’, etc. A so-called ‘lookup table’ prepared beforehand may be very helpful with such case.

Another way is to group them manually with respect to the average level of the target. One-Way chart is extremely helpful here. You have to be aware that this is not an a priori method (not an operation planned beforehand), so you are adjusting your model to fit your training dataset. Still, you are reducing the cardinality. It may lead to overfitting, so it’s always necessary to check whether this grouping is relevant also on your validation sample.

Based on your expert judgement and/or your findings with One-Way chart, you can use in the model only categories where the average prediction differs significantly from the average target, and where there is a decent exposure. Categories with little exposure usually shouldn’t be specified in the model unless they are grouped, e.g. to the ‘Other’ category.

According to the best modelling practice, a category with the highest exposure should be dropped – it will serve as a base customer profile and will be included in the intercept’s level of prediction.

Engineering of Numeric Features

A numeric feature needs a different treatment. We can just add it as it is, but then it will be included as a linear function, which means that all values that this variable may take will be multiplied by the same coefficient.

We can also reduce the number of different values by binning them into ranges, which then are encoded to separate binary columns – long story short, we transform this numeric variable into a set of categorical features. Then, instead of a linear prediction, we will get a couple of different levels. It may generalise our prediction, and is also a good way to prepare easy-to-export rating factors.

Sometimes we can see on a One-Way chart that the target’s values form a curve of a well-known shape, e.g. a logarithmic one. In such cases, we can add this variable under the transformation of such function. Logarithms (or square roots) are commonly used for features in which the target’s value increases rapidly at the beginning, but its right tail (the values on the right side on a chart) are much closer to each other. In some cases, one may also use an exponential or a power function, or any other mathematical function (or a combination of them). For example, features like population density may be nicely generalised by a logarithmic function, but it always depends on the case.

When we see that the target’s values for our numeric variable look partially polynomial, and they cannot be generalised well with one global function or a reasonable number of bins, we should use a spline transformation. It’s a piecewise polynomial function that helps to fit different curves to different segments of the feature. It’s very helpful, e.g. for fitting variables like the driver’s age, where young drivers’ behaviour usually significantly differs from their parents’ or seniors’. For some cases it may be important to ensure that a spline is globally monotonic.

Dealing with Low Exposure in Tails

For numeric variables it usually happens that there is very little exposure in tails (the smallest and the highest values). Handling them properly is a very important and tricky case.

For example, it may happen that you will see a drop in the actual frequency of claims for people who had the highest number of claims in the past 5 years. The increasing trend of spotted frequency with respect to this variable will be stopped at some point, because these few policyholders with the worst claim history happen to not have any claims last year.

Do we want to extrapolate this behaviour on every future customer with such amount of past claims? Do we want our premium model to break the trend and apply a relative discount for such high risk-prone policyholders? Certainly not. That’s when we should take into consideration transforming this tail manually, maybe by applying there a slightly increasing linear function (by fixing the coefficient).

Sometimes we may not be sure whether the trend should be maintained for tails, or maybe it should be stopped. In the latter case maximum and minimum functions may be applied on the variable. Such formula will ensure the same level of prediction for all values above the given maximum or below the given minimum. It serves as a cap and floor mechanism, and it is also used to handle unexpected extreme values in explanatory variables. Without it, such an extreme value would be multiplied by a feature’s coefficient, which may result in an enormous (or too small) and unfair premium.

Interactions

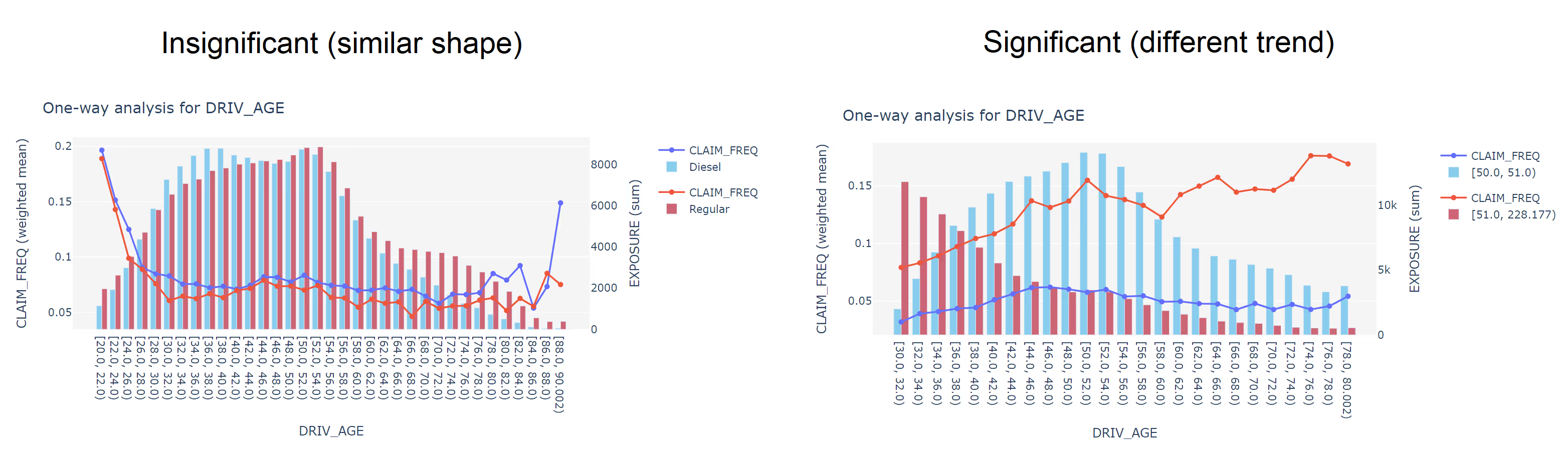

So far, we were discussing how to transform our features independently to generalise our target’s values best. Here we will consider two-dimensional effects, so combinations of two variables.

An interaction occurs when the effect of one variable on the target variable depends on another variable. In order to visually evaluate whether such significant interactions exist in our dataset, we can use a One-Way chart again, but this time with an extra segmentation.

Predictions from the model and the target’s values will be shown on the Y axis, one feature will be placed on the X axis, and another one will be used as a segmentation. If the target’s curves have a different shape (e.g. one is decreasing, while another one is increasing or flat), we may consider it as worth adding to the model in the interaction form. If the curves for different segmentation have a similar shape but differs on the average level of the Y axis (similar-looking curves but above or below one another), then there is no need to include it – such interaction won’t improve our predictions. The difference between the level of such curves will be handled without applying interaction.

Geography Modelling

In our datasets we usually have some geographical information about policyholders or the subject of insurance. They usually turn out to be highly significant for our models.

Actuaries very often have access to the most detailed information, to full addresses or at least ZIP Codes (Postal Codes). It is a common practice to group such numerous categories into less granular ones, such as regions, states, counties, etc. (depending on the country’s administrative units). The level of granularity that we should choose depends on the volume of our data – we don’t want to model on categories with too little exposure.

Actuaries sometimes, instead of using regions directly, look for some statistical information that describes them well, such as the population density. It provides much smoother transition between predictions, i.e. in places around the administrative borders, and allows for a better differentiation.

A sophisticated software allows pricing actuaries to very easily apply two-dimensional splines. This is the most granular method of modelling geography – polynomial transformations are applied on latitudes and longitudes to create a very detailed net of different relativities all over the country, with geospecial dependencies maintained.

However, such 2D splines are not always a good idea. It is necessary to choose the granularity level with respect to the volume of your data. For small or medium portfolios it may be a much better idea to build models only on regions or statistical information connected to them. You should always take cautious not to overfit your model.

What’s more, splines tend to behave in an unexpected way close to the country borders, and don’t deal well with territorial inconsistencies, such as islands or colonies. The solution to such cases is to model geography on residuals.

Due to the fact that there are many different approaches to geography modelling, this is a great opportunity to outperform our competition’s models.

Regularisation

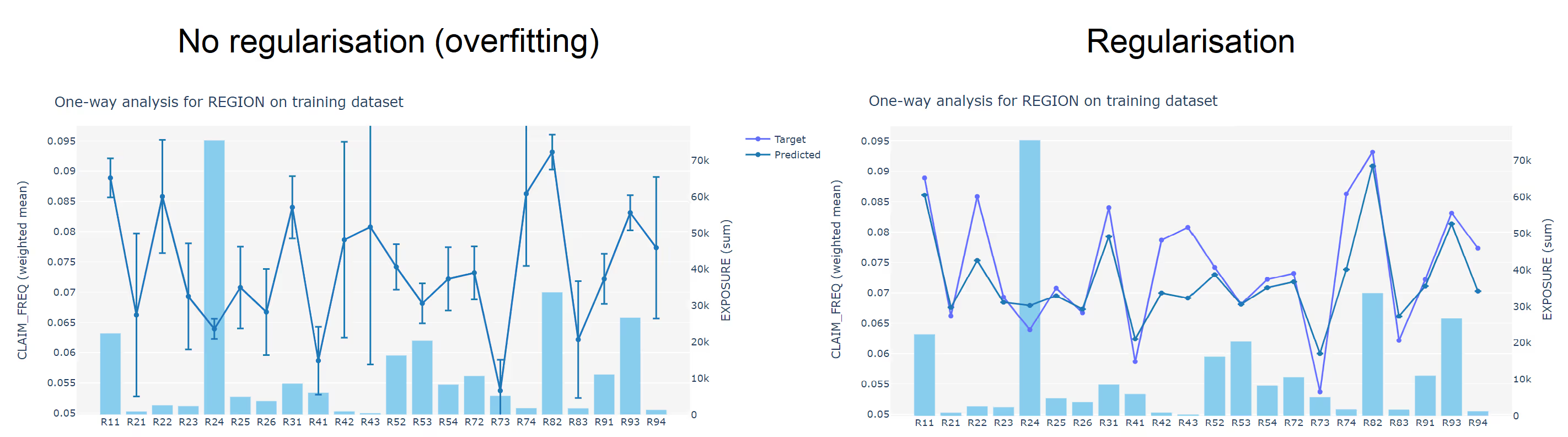

Sometimes it happens that there are categories with little exposure that we want to include in the model. In such cases, we cannot completely trust target’s values, because it may lead to overfitting of the model, which is only based on the available information. Including such low exposure categories may result in assuming a concrete, sample-specific behaviour to be a general one.

Regularisation is a method of applying the credibility theory in pricing. It’s an algorithm that adds penalisation to small categories in the way that predictions for these cases are adjusted to the grand weighted average of the target’s value. Let’s say that because of little exposure we don’t want to rely 100% on our data, but at the same time we don’t want to completely ignore the observed effect. So, with regularisation we will have it more or less included, but also adjusted to the average level.

Automation

With all of the above included, building GLM/GAM models manually sounds like a great experience, but usually our datasets consist of hundreds of columns. Examining all of them during EDA or the modelling step, especially if we need to process millions of observations, is a tremendous and a very time-consuming effort.

Luckily, the most troublesome steps, so:

• selection of significant variables,

• choosing optimal transformations for numeric features,

• identifying optimal binning and mapping,

• finding significant interactions,

can be easily automated with a proper software. Even a complete model can be created this way.

Of course, such automation won’t replace actuaries, but it will save days of their work, and such automatically generated model will be at least a perfect base to be later polished by a pricing expert. Please bear in mind that such algorithms are only numerical methods, they don’t have your expert judgement skill and don’t know your business assumptions. They are also much more prone to data leakege.

Conclusion

We briefly went through the most common techniques used to build well-predicting generalised models. Equipped with this knowledge you should try to apply them in your modelling assignments. Of course, not all of them are relevant for each case, but definitely they all are worth considering.

Knowing how to build predictive models, you should also check our previous post to learn how to evaluate them with different statistical measures and be capable of choosing the best model of many.

If you’re interested in an end-to-end pricing platform with all modelling capabilities described above, including the GLM automation, please contact us.

Did you enjoy this article? Follow us onLinkedIn if you want to be informed about our next posts: https://www.linkedin.com/company/quantee.